Killer Apps: Developing a Code of Ethics for AI

It is clear that we are still in the early stages of AI adoption. It is also clear that, like every other man-made tool, AI will be used for all sorts of purposes, including the pursuit of power and profit. A set of guiding principles is therefore required to match the computational benefits of AI with humanity’s social goals.

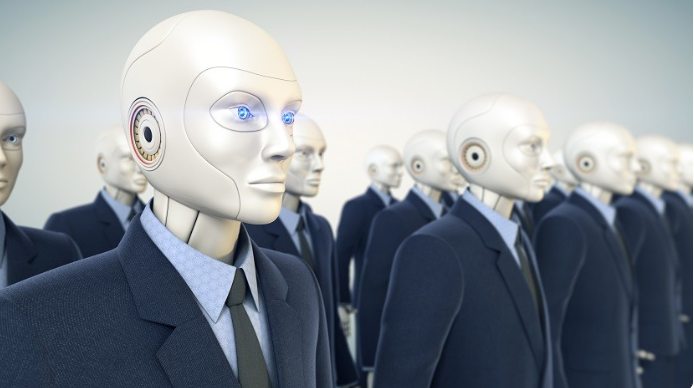

In 1942, science fiction writer Isaac Asimov created “The Three Laws of Robotics” as a means of limiting the risks of autonomous AI agents. These laws were a device to drive his storylines, and his stories eventually inspired the 2004 blockbuster movie I, Robot, starring Will Smith. But no scientist has figured out how to build these laws into a machine.

A non-profit institute founded by MIT cosmologist Max Tegmark, Skype co-founder Jaan Tallinn and DeepMind research scientist Viktoriya Krakovna has worked with AI researchers and developers to establish a set of guiding principles, which are now referred to as the Asilomar AI Principles.

The Asilomar code of ethics holds that the goal of AI research should be to create AI systems that are accountable to a competent human authority, so as to ensure that their goals and behaviors align with human values throughout their operation.

The problem with having a global code of ethics for AI is similar to the problem that scientists have with applying Asimov’s Three Laws. AI is a broad term for many loosely related but different technologies, so a common code would need to be so generic that its practical application would be virtually meaningless. Additionally, there are so many political and cultural approaches to privacy and ethics across the globe that it is unlikely that we could ever reach a consensus on which global standards are locally relevant.

A viable code of AI ethics that somehow reflects universal human values and can also be applied in different regions, regulatory environments, and industries seems an impossible challenge. The AI genie is now portable, accessible and economical, and will not go back into the bottle.

All ethics involve context and interpretation. It is not possible for ethics to be strictly deduced from principles that can then be written as code. Learning systems are limited by the data on which they are trained, and by their ability to weigh trade-offs and dilemmas. Systems can go from super-smart to super-dumb in an instant. The same can be said of corporations.

Unlike corporations, humans do not have one clear objective. To deal with the trade-offs and dilemmas that humans face when making decisions, economists created a fictional and simplified version of a human called “Homo Economicus” (economic man). Issues like fairness, justice, and accountability can lead to logically valid yet contradictory conclusions that are confusing to economic man.

For the most part, corporations are not good at dealing with the real-world ethical challenges of the products and services they create. They use AI together with private and personal data to make crucial decisions about medical treatments, probation, insurance, and lending eligibility: areas with a history of contributing to structural sources of societal inequities. But there remains considerable disagreement about whether data privacy and protection should be viewed more as a political right or more as a social and economic right. The broader debate about the distinction between political and social rights is at the heart of whether the digital sovereignty of the state takes primacy over the data rights of the individual.

The rapid development of AI is forcing us to consider how to encode political, social and economic rights into the decision-making tools and structures of the future. Arguably, the potential of AI to destroy or elevate humanity is greater than that of any technology before it. Can our ability to address age-old human values like fairness, freedom, or security keep up with our ability to create more powerful technology?